As developers working with machine learning, we constantly evaluate frameworks that help us ship high-quality models faster. PyTorch, developed by Facebook’s AI Research lab, has quickly become a top choice in the deep learning community. Its dynamic computation graph, Pythonic syntax, and rich ecosystem make it ideal for developers who value both flexibility and speed.

From my experience building and deploying AI systems in production, PyTorch offers a smooth workflow that speeds up experimentation while still supporting scalable, production-level deployment. This article explores why it is so effective, how developers can take full advantage of it, and which pitfalls to avoid when building robust AI solutions.

Why Developers Choose PyTorch

Unlike static graph-based frameworks, PyTorch enables eager execution, meaning code runs line-by-line like standard Python. This approach allows developers to test ideas and debug code more easily, especially when experimenting with model architecture or custom loss functions.
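Here is a minimal sketch of what eager execution makes possible. It assumes only `torch`, and the class name `DynamicDepthNet` and its `n_steps` argument are hypothetical. The number of times the layer is applied is an ordinary Python argument chosen at call time, and you can print intermediate tensors like any other value:

```python
import torch
import torch.nn as nn

class DynamicDepthNet(nn.Module):
    """Hypothetical model: applies the same layer a variable number of times."""

    def __init__(self, width: int = 8):
        super().__init__()
        self.layer = nn.Linear(width, width)

    def forward(self, x: torch.Tensor, n_steps: int) -> torch.Tensor:
        # A plain Python loop: the graph is recorded as the code runs,
        # so n_steps can be different on every call.
        for step in range(n_steps):
            x = torch.tanh(self.layer(x))
            # Intermediate results are ordinary tensors you can inspect.
            print(f"step {step}: mean activation = {x.mean().item():.4f}")
        return x

torch.manual_seed(0)
model = DynamicDepthNet()
out = model(torch.randn(2, 8), n_steps=3)
out.sum().backward()  # gradients flow back through however many steps actually ran
print(model.layer.weight.grad.shape)  # torch.Size([8, 8])
```

Because each call builds its own graph, you can also drop a debugger breakpoint inside `forward` and step through it line by line.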

Additionally, it provides:

A clean, intuitive API

Native support for CUDA GPU acceleration

Easy interoperability with NumPy (tensors convert to and from arrays cheaply), which in turn connects it to Python data tools like pandas and scikit-learn

Built-in export paths to TorchScript and ONNX for deployment
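NumPy is where the data-tool integration is tightest. The sketch below assumes CPU tensors. pandas and scikit-learn typically connect through NumPy arrays (for example, via `DataFrame.to_numpy()`), so they are not shown separately:

```python
import numpy as np
import torch

arr = np.arange(6, dtype=np.float32).reshape(2, 3)

# torch.from_numpy shares memory with the source array (no copy on CPU).
t = torch.from_numpy(arr)
t[0, 0] = 100.0
print(arr[0, 0])  # 100.0: the edit through the tensor is visible in NumPy

# .numpy() converts a CPU tensor back; call .detach() first
# if the tensor is part of an autograd graph.
back = (t * 2).numpy()
print(back.dtype)  # float32
```

The memory sharing is what keeps conversions cheap. It also means in-place edits on either side affect the other, so call `.copy()` or `.clone()` when you need an independent buffer.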

Whether you’re a solo developer, part of a startup team, or working at scale in enterprise environments, it adapts to your workflow.

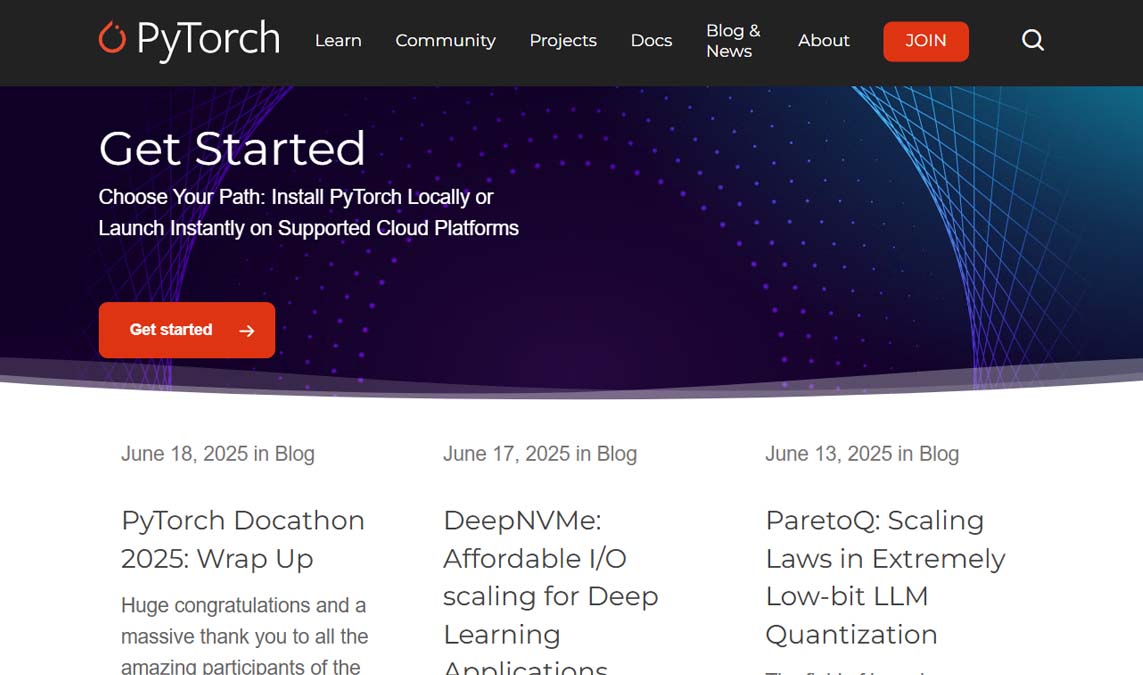

Installing PyTorch and Setting Up Your Environment

Getting started is simple. Developers can install PyTorch using pip or conda, depending on their environment:

```bash
pip install torch torchvision torchaudio
```

For GPU support, the PyTorch website offers an interactive selector that helps you choose the right build based on your CUDA version and operating system. I highly recommend creating a virtual environment beforehand to isolate dependencies and avoid conflicts across projects.

After installation, verify GPU support with:

```python
import torch

print(torch.cuda.is_available())  # True if PyTorch can use a CUDA GPU
```

This quick check confirms that PyTorch can see a CUDA-capable GPU. If it prints False, either no GPU is present or the installed build or driver does not support it.
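In practice, most scripts choose a device once and reuse it everywhere. The following is a minimal sketch of that common pattern, which falls back to the CPU so the same code runs on machines without a GPU:

```python
import torch

# Use the GPU when available, otherwise the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"PyTorch {torch.__version__}, using device: {device}")

if device.type == "cuda":
    # Name of the first visible GPU and the CUDA version this build targets.
    print(torch.cuda.get_device_name(0), torch.version.cuda)

x = torch.randn(3, 3, device=device)  # allocate directly on the chosen device
print(x.device)
```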

Building Models with PyTorch

For developers, one of PyTorch's most compelling aspects is how simple it is to define models. You subclass nn.Module, create your layers in __init__, and implement the forward pass. Here's a quick example:

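```python
import torch
import torch.nn as nn

class SimpleNet(nn.Module):
    """Two-layer fully connected network: 10 inputs -> 64 hidden units -> 1 output."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(10, 64)  # 10 input features to 64 hidden units
        self.fc2 = nn.Linear(64, 1)   # 64 hidden units to a single output

    def forward(self, x):
        x = torch.relu(self.fc1(x))  # non-linearity between the two layers
        return self.fc2(x)
```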

With this architecture in place, you can train the model with a training loop you write yourself, or use higher-level tools like PyTorch Lightning for more structured workflows.
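For reference, a minimal hand-written loop might look like the sketch below. The synthetic data (each target is the sum of its inputs), the Adam optimizer, the learning rate, and the epoch count are illustrative assumptions, not settings from a real project. SimpleNet is repeated so the block runs on its own:

```python
import torch
import torch.nn as nn

class SimpleNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(10, 64)
        self.fc2 = nn.Linear(64, 1)

    def forward(self, x):
        return self.fc2(torch.relu(self.fc1(x)))

torch.manual_seed(0)
# Illustrative synthetic regression data: each target is the sum of its 10 inputs.
X = torch.randn(256, 10)
y = X.sum(dim=1, keepdim=True)

model = SimpleNet()
loss_fn = nn.MSELoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)

with torch.no_grad():
    initial_loss = loss_fn(model(X), y).item()  # baseline before any updates

for epoch in range(200):
    optimizer.zero_grad()        # clear gradients from the previous step
    loss = loss_fn(model(X), y)  # forward pass on the full (small) dataset
    loss.backward()              # compute gradients
    optimizer.step()             # update the weights

print(f"loss before: {initial_loss:.4f}, after: {loss.item():.4f}")
```

With real data you would iterate over mini-batches from a DataLoader instead of the full tensor, but the four core steps (zero, forward, backward, step) stay the same.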

PyTorch in Real Projects

Over the years, I’ve used PyTorch in a wide range of production-grade applications. Here are a few real-world examples where PyTorch excelled:

Computer vision: We built a real-time image classification tool for a retail client using pretrained models from torchvision.

NLP: In a chatbot project, we used transformers fine-tuned with Hugging Face and PyTorch to deliver context-aware responses.

Time-series forecasting: For a fintech app, we implemented LSTM networks to predict stock price movements.

Reinforcement learning: PyTorch was instrumental in training agents for a game AI using the OpenAI Gym environment.

These examples highlight PyTorch’s flexibility. It’s suitable for both research-driven prototypes and mission-critical applications.
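To illustrate the time-series case, here is a one-step-ahead LSTM forecaster in sketch form. This is not the model from the fintech project. The class name, window length, and hidden size are hypothetical, and a real forecaster would also need data windowing, normalization, and a training loop:

```python
import torch
import torch.nn as nn

class LSTMForecaster(nn.Module):
    """Hypothetical forecaster: reads a window of past values, predicts the next one."""

    def __init__(self, n_features: int = 1, hidden_size: int = 32):
        super().__init__()
        # batch_first=True means inputs are shaped (batch, seq_len, features).
        self.lstm = nn.LSTM(n_features, hidden_size, batch_first=True)
        self.head = nn.Linear(hidden_size, 1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        output, _ = self.lstm(x)         # output: (batch, seq_len, hidden_size)
        return self.head(output[:, -1])  # predict from the last time step only

# Toy input: a batch of 4 windows, each 20 steps of a single feature.
model = LSTMForecaster()
windows = torch.randn(4, 20, 1)
prediction = model(windows)
print(prediction.shape)  # torch.Size([4, 1])
```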

Best Practices for Developers

To get the most out of PyTorch, here are some practices I’ve found helpful:

Use DataLoader objects for efficient batching and shuffling during training.

Move both the model and its input tensors to the GPU early in your pipeline, with .cuda() or, more portably, .to(device).

Track experiments with TensorBoard (built in as torch.utils.tensorboard, or via the tensorboardX package) or Weights & Biases for visualization.

Avoid hardcoding shapes: read dimensions from .shape and reshape with .view() (for example, x.view(x.size(0), -1)) so your code adapts to different batch and input sizes.

Save and load checkpoints with torch.save() and torch.load() to recover from crashes or resume training.
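The batching, device, and shape tips fit together in a few lines. This sketch uses random placeholder data in an in-memory TensorDataset. The last batch is deliberately smaller than the others, which is exactly where a hardcoded batch size would break:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

torch.manual_seed(0)
# Placeholder dataset: 100 single-channel 8x8 "images" with integer labels.
images = torch.randn(100, 1, 8, 8)
labels = torch.randint(0, 10, (100,))

loader = DataLoader(TensorDataset(images, labels), batch_size=32, shuffle=True)

batch_sizes = []
for xb, yb in loader:
    xb, yb = xb.to(device), yb.to(device)  # inputs must live on the model's device
    # Flatten using the batch's own size rather than a hardcoded 32:
    # the final batch holds only 100 - 3 * 32 = 4 samples.
    flat = xb.view(xb.size(0), -1)  # shape (batch, 64)
    batch_sizes.append(flat.shape[0])

print(batch_sizes)  # [32, 32, 32, 4]
```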

These tips help maintain performance and avoid bugs that could appear in large-scale pipelines.
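A common checkpointing pattern saves `state_dict()`s rather than whole pickled models. The sketch below assumes a toy `nn.Linear` model, an SGD optimizer, and a temporary file path. The dictionary keys (`epoch`, `model_state`, `optimizer_state`) are arbitrary names chosen for illustration, not a required format:

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Linear(10, 1)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
epoch = 5  # hypothetical: the epoch that just finished

# Save everything needed to resume, not just the weights.
path = os.path.join(tempfile.mkdtemp(), "checkpoint.pt")
torch.save({
    "epoch": epoch,
    "model_state": model.state_dict(),
    "optimizer_state": optimizer.state_dict(),
}, path)

# Later (e.g. after a crash): rebuild the objects, then restore their state.
restored_model = nn.Linear(10, 1)
restored_opt = torch.optim.SGD(restored_model.parameters(), lr=0.1)
checkpoint = torch.load(path, map_location="cpu")
restored_model.load_state_dict(checkpoint["model_state"])
restored_opt.load_state_dict(checkpoint["optimizer_state"])
start_epoch = checkpoint["epoch"] + 1  # resume from the next epoch
```

Storing the optimizer state matters for optimizers like Adam, whose running averages would otherwise restart from zero after a resume.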

Common Pitfalls to Avoid

Even with its developer-friendly interface, PyTorch has some nuances that can trip you up:

Forgetting to zero out gradients. PyTorch accumulates gradients across backward passes, so call optimizer.zero_grad() before each loss.backward().

Moving only the model to CUDA, but not the input tensors. Both must be on the same device, or PyTorch raises a runtime error.

Skipping validation during training. Run a separate validation loop, with the model in eval() mode, to detect overfitting early.

Inconsistent random seeds when comparing model performance. Call torch.manual_seed() (and seed NumPy or Python's random module if you use them) for reproducibility.
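The gradient and seeding pitfalls are easy to see on a single scalar parameter. For brevity, this sketch calls `backward()` directly on a tensor instead of going through an optimizer:

```python
import torch

w = torch.tensor([2.0], requires_grad=True)

# Two backward passes without clearing: the gradients are summed into w.grad.
for _ in range(2):
    loss = (3 * w).sum()  # d(loss)/dw = 3
    loss.backward()
print(w.grad)  # tensor([6.]), i.e. 3 + 3 rather than 3

# Since PyTorch 2.0, optimizer.zero_grad() resets .grad to None by default.
w.grad = None
(3 * w).sum().backward()
print(w.grad)  # tensor([3.])

# Same seed, same "random" numbers: runs become directly comparable.
torch.manual_seed(42)
a = torch.randn(3)
torch.manual_seed(42)
b = torch.randn(3)
print(torch.equal(a, b))  # True
```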

Being mindful of these issues helps prevent subtle bugs and ensures accurate, reproducible results.
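A validation pass can be factored into a small helper like the hypothetical `evaluate()` below. It assumes a loss function with the default mean reduction (so each batch is weighted by its size) and a loader that yields `(inputs, targets)` pairs. The model and data in the usage lines are placeholders:

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

def evaluate(model: nn.Module, loader, loss_fn) -> float:
    """Hypothetical helper: average loss over a loader, without updating weights."""
    model.eval()  # dropout and batch norm switch to inference behavior
    total_loss, n_samples = 0.0, 0
    with torch.no_grad():  # no autograd graph: less memory, no gradient tracking
        for xb, yb in loader:
            total_loss += loss_fn(model(xb), yb).item() * xb.size(0)
            n_samples += xb.size(0)
    model.train()  # restore training mode for the next epoch
    return total_loss / n_samples

# Placeholder validation data and model.
torch.manual_seed(0)
val_X = torch.randn(50, 10)
val_y = val_X.sum(dim=1, keepdim=True)
val_loader = DataLoader(TensorDataset(val_X, val_y), batch_size=16)
model = nn.Sequential(nn.Linear(10, 32), nn.ReLU(), nn.Dropout(0.2), nn.Linear(32, 1))

val_loss = evaluate(model, val_loader, nn.MSELoss())
print(f"validation loss: {val_loss:.4f}")
```

Because `eval()` disables dropout, calling `evaluate()` twice on the same data gives the same number. In training mode, dropout would make each pass slightly different.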

Deployment Options for PyTorch Models

Once your model is trained, PyTorch supports various deployment strategies:

TorchScript: Allows you to convert models into a serializable format for optimized execution.

ONNX export: Useful for interoperability with platforms like TensorRT or mobile deployment.

PyTorch Mobile: Enables on-device deployment on iOS and Android (newer projects may want to look at ExecuTorch, its successor for edge devices).

Flask/FastAPI: Wrap your model in a web API for scalable serving.

I’ve used it successfully in mobile apps and found ONNX helpful for deploying models in environments with strict performance requirements.
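For the TorchScript route, here is a minimal sketch using tracing, with a placeholder model and a temporary file path. ONNX export through `torch.onnx.export` follows a similar export-then-load idea but is not shown here:

```python
import os
import tempfile

import torch
import torch.nn as nn

model = nn.Sequential(nn.Linear(10, 64), nn.ReLU(), nn.Linear(64, 1))
model.eval()

# torch.jit.trace records the operations run on an example input.
# torch.jit.script is the alternative when forward() has data-dependent control flow.
example = torch.randn(1, 10)
traced = torch.jit.trace(model, example)

path = os.path.join(tempfile.mkdtemp(), "model_traced.pt")
traced.save(path)

# The saved file loads without the original Python class definition
# (e.g. from C++ via LibTorch, or from Python as below).
loaded = torch.jit.load(path)
with torch.no_grad():
    print(torch.allclose(loaded(example), model(example)))  # True
```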

The PyTorch Ecosystem and Community

The PyTorch community is vast and developer-focused. Tools like:

Hugging Face Transformers

PyTorch Lightning

TorchMetrics

Captum for model interpretability

…extend its capabilities even further. Whether you’re building vision, NLP, or audio models, the open-source ecosystem saves time and enhances project quality.

Community forums, GitHub repositories, and detailed documentation make it easy to solve problems and discover best practices.

The Future of PyTorch

PyTorch continues to evolve rapidly. As of 2025, the PyTorch Foundation (part of the Linux Foundation) guides its development with long-term stability in mind. Areas the community is pushing on include:

Tighter JAX interoperability

Native support for graph neural networks

Expanded mobile and edge tooling

Better quantization for lightweight models

For developers, this means a framework that’s not just future-ready—but built for the future.

Final Thoughts

PyTorch has earned its place as one of the most developer-friendly deep learning frameworks available. It combines flexibility, readability, and power in a way that accelerates the entire machine learning lifecycle—from research to deployment.

As a developer, I’ve used it to solve real business problems, ship products faster, and learn more about AI along the way. With its vibrant ecosystem and continual updates, PyTorch remains a smart choice for any developer looking to build scalable, intelligent applications.